An article in Science Magazine published mid-January describes a non-trivial method of snooping on smartphone users through an ambient light sensor. All smartphones and tablets have this component built in, as do many laptops and TVs. Its primary task is to sense the amount of ambient light in the environment the device finds itself in, and to alter the brightness of the display accordingly.

But first we need to explain why a threat actor would use a tool ill-suited for capturing footage instead of the target device’s regular camera. The reason is that such “ill-suited” sensors are usually totally unprotected. Let’s imagine an attacker tricked a user into installing a malicious program on their smartphone. The malware will struggle to gain access to oft-targeted components, such as the microphone or camera. But to the light sensor? Easy as pie.

So, the researchers proved that this ambient light sensor can be used instead of a camera; for example, to get a snapshot of the user’s hand entering a PIN on a virtual keyboard. In theory, by analyzing such data, it’s possible to reconstruct the password itself. This post explains the ins and outs in plain language.

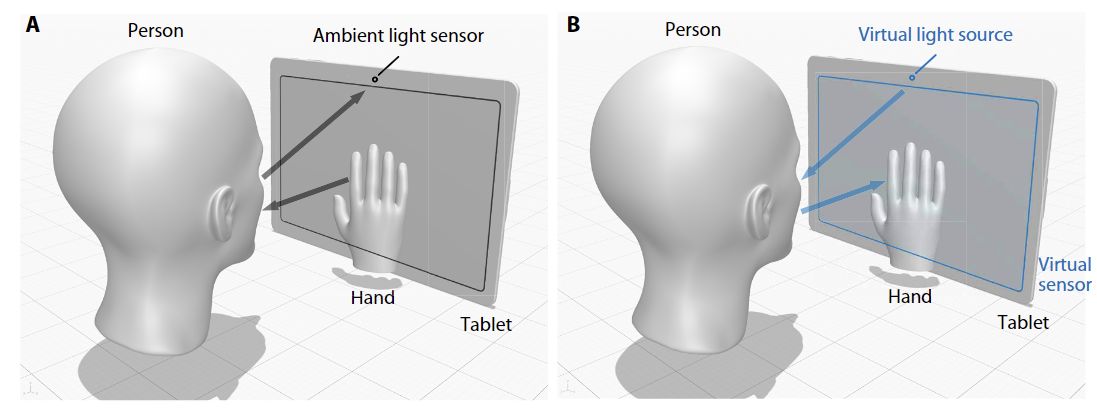

“Taking shots” with a light sensor. Source

A light sensor is a rather primitive piece of technology. It’s a light-sensitive photocell for measuring the brightness of ambient light several times per second. Digital cameras use very similar (albeit smaller) light sensors, but there are many millions of them. The lens projects an image onto this photocell matrix, the brightness of each element is measured, and the result is a digital photograph. Thus, you could describe a light sensor as the most primitive digital camera there is: its resolution is exactly one pixel. How could such a thing ever capture what’s going on around the device?

The researchers used the Helmholtz reciprocity principle, formulated back in the mid-19th century. This principle is widely used in computer graphics, for example, where it greatly simplifies calculations. In 2005, the principle formed the basis of the proposed dual photography method. Let’s take an illustration from this paper to help explain:

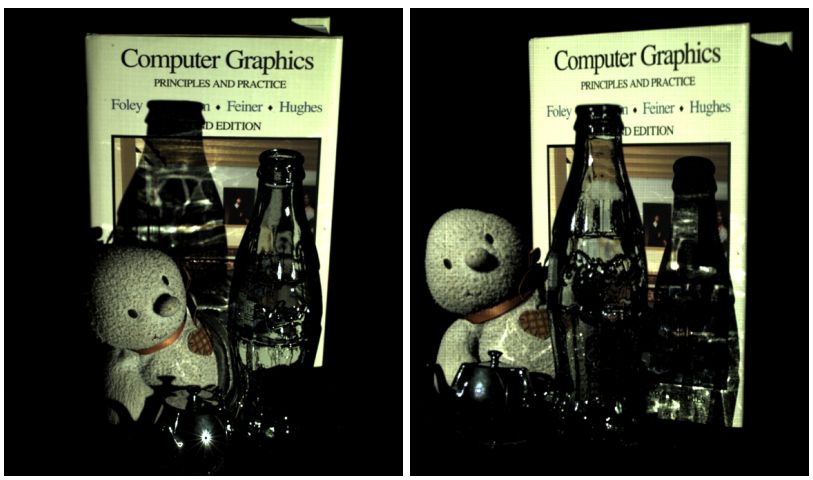

On the left is a real photograph of the object. On the right is an image calculated from the point of view of the light source. Source

Imagine you’re photographing objects on a table. A lamp shines on the objects, the reflected light hits the camera lens, and the result is a photograph. Nothing out of the ordinary. In the illustration above, the image on the left is precisely that — a regular photo. Next, in greatly simplified terms, the researchers began to alter the brightness of the lamp and record the changes in illumination. As a result, they collected enough information to reconstruct the image on the right — taken as if from the point of view of the lamp. There’s no camera in this position and never was, but based on the measurements, the scene was successfully reconstructed.

Most interesting of all is that this trick doesn’t even require a camera. A simple photoresistor will do… just like the one in an ambient light sensor. A photoresistor (or “single-pixel camera”) measures changes in the light reflected from objects, and this data is used to construct a photograph of them. The quality of the image will be low, and many measurements must be taken — numbering in the hundreds or thousands.
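To make the single-pixel idea concrete, here is a minimal sketch in Python. It is not the researchers' code: it assumes a tiny 4×4 scene, a standard set of on/off Hadamard patterns as the illumination, and an idealized noise-free photocell. The names `hadamard`, `measure` and `reconstruct` are made up for this illustration.

```python
def hadamard(n):
    """Sylvester construction of an n x n matrix of +1/-1 (n must be a power of two)."""
    h = [[1]]
    while len(h) < n:
        h = [row + row for row in h] + [row + [-v for v in row] for row in h]
    return h


def measure(scene, pattern):
    """One photocell reading: total light reflected while `pattern` illuminates the scene."""
    return sum(p * s for p, s in zip(pattern, scene))


def reconstruct(readings, h):
    """Recover per-pixel reflectance from one brightness reading per pattern."""
    n = len(h)
    total = readings[0]  # the first pattern is all-on, so this is the sum of the scene
    signed = [2 * y - total for y in readings]  # readings as if patterns were +1/-1
    # Rows of h are mutually orthogonal, so transposing and dividing by n inverts it.
    return [sum(h[i][j] * signed[i] for i in range(n)) / n for j in range(n)]


# Hypothetical 4x4 scene, flattened row by row (higher value = more reflective).
scene = [0, 0, 9, 0,
         0, 9, 9, 0,
         0, 0, 9, 0,
         0, 9, 9, 9]

h = hadamard(16)
patterns = [[(v + 1) // 2 for v in row] for row in h]  # a light source can only show 0 or 1
readings = [measure(scene, p) for p in patterns]       # 16 single-pixel measurements
image = reconstruct(readings, h)

for r in range(4):
    print([round(v) for v in image[4 * r:4 * r + 4]])
```

Sixteen brightness readings are enough to recover all sixteen pixels here only because the sketch has no noise; with a real sensor the readings are noisy and coarse, which is why the reconstructed images are so rough.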

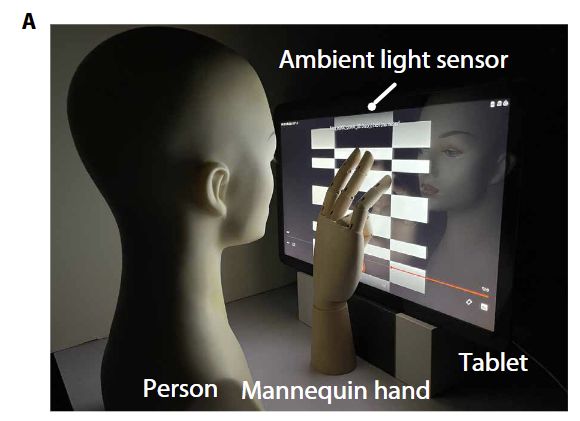

Experimental setup: a Samsung Galaxy View tablet and a mannequin hand. Source

Let’s return to the study and the light sensor. The authors of the paper used a fairly large Samsung Galaxy View tablet with a 17-inch display. Various patterns of black and white rectangles were displayed on the tablet’s screen. A mannequin was positioned facing the screen in the role of a user entering something on the on-screen keyboard. The light sensor captured the resulting changes in brightness. From several hundred such measurements, an image of the mannequin’s hand was reconstructed. That is, the authors applied the Helmholtz reciprocity principle to get a photograph of the hand, taken as if from the point of view of the screen. The researchers effectively turned the tablet display into an extremely low-quality camera.

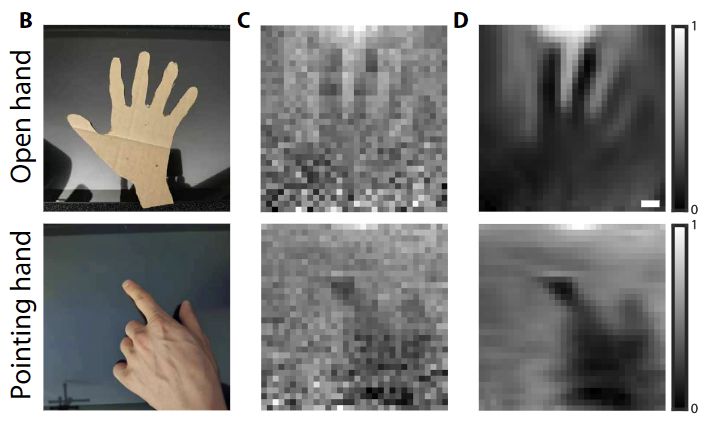

Comparing real objects in front of the tablet with what the light sensor captured. Source

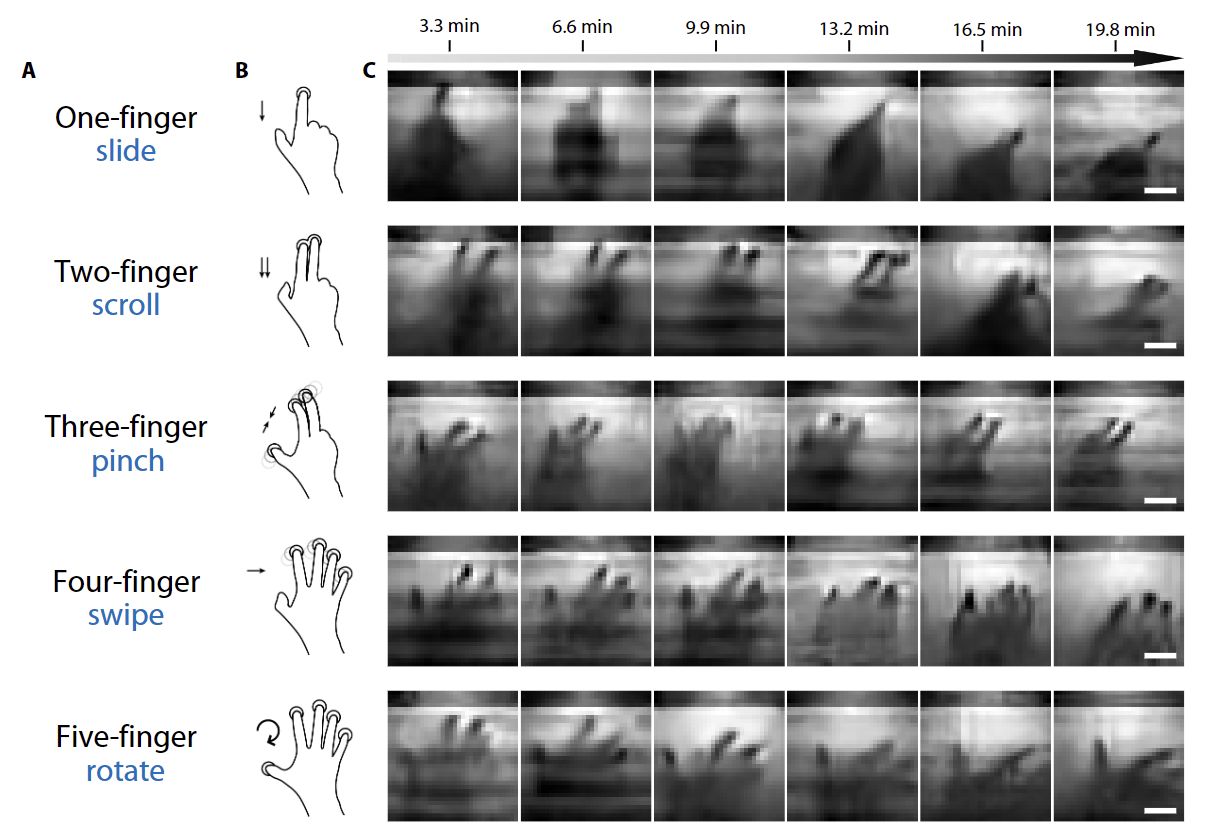

True, not the sharpest image. The above-left picture shows what needed to be captured: in one case, the open palm of the mannequin; in the other, how the “user” appears to tap something on the display. The images in the center are a reconstructed “photo” at 32×32 pixel resolution, in which almost nothing is visible — too much noise in the data. But with the help of machine-learning algorithms, the noise was filtered out to produce the images on the right, where we can distinguish one hand position from the other. The authors of the paper give other examples of typical gestures that people make when using a tablet touchscreen. Or rather, examples of how they managed to “photograph” them:

Capturing various hand positions using a light sensor. Source

So can we apply this method in practice? Is it possible to monitor how the user interacts with the touchscreen of a tablet or smartphone? How they enter text on the on-screen keyboard? How they enter credit card details? How they open apps? Fortunately, it’s not that straightforward. Note the captions above the “photographs” in the illustration above. They show how slowly this method works. In the best-case scenario, the researchers were able to reconstruct a “photo” of the hand in just over three minutes. The image in the previous illustration took 17 minutes to capture. Real-time surveillance at such speeds is out of the question. It’s also clear now why most of the experiments featured a mannequin’s hand: a human being simply can’t hold their hand motionless for that long.
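A rough back-of-the-envelope calculation shows why the method is inherently slow. The sketch below assumes one sensor reading per displayed pattern and, purely as an illustration, one pattern per pixel of a 32×32 image; the pattern count is our assumption, not a figure from the paper. The reading rates are the 10–20 per second typical of such sensors.

```python
def capture_seconds(num_patterns, readings_per_second):
    """Idealized capture time, assuming one sensor reading per displayed pattern."""
    return num_patterns / readings_per_second


PATTERNS = 32 * 32  # assumption: one pattern per pixel of a 32x32 image

for rate in (20, 10):
    seconds = capture_seconds(PATTERNS, rate)
    print(f"{rate} readings/s -> {seconds:.0f} s per image")
```

Even this idealized estimate comes out at roughly one to two minutes per frame, and it ignores any overhead; the capture times the researchers report are longer still.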

But that doesn’t rule out the possibility of the method being improved. Let’s ponder the worst-case scenario: each hand image can be obtained not in three minutes but in, say, half a second; the on-screen output is not some strange black-and-white figures but a video, set of pictures, or animation of interest to the user; and the user does something worth spying on. Then the attack would make sense. But even then, not much sense. All the researchers’ efforts are undermined by the fact that if an attacker managed to slip malware onto the victim’s device, there are many easier ways to then trick them into entering a password or credit card number. Perhaps for the first time in covering such papers (examples: one, two, three, four), we are struggling even to imagine a real-life scenario for such an attack.

All we can do is marvel at the beauty of the proposed method. This research serves as another reminder that the seemingly familiar, inconspicuous devices we are surrounded by can harbor unusual, lesser-known functionalities. That said, for those concerned about this potential violation of privacy, the solution is simple. Such low-quality images are due to the fact that the light sensor takes measurements quite infrequently: 10–20 times per second. The output data also lacks precision. However, that’s only relevant for turning the sensor into a camera. For the main task, measuring ambient light, this rate is higher than necessary. We can “coarsen” the data even more, transmitting it, say, five times per second instead of 20. For matching the screen brightness to the level of ambient light, this is more than enough. But spying through the sensor, already improbable, would become impossible. Perhaps for the best.
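As a minimal sketch of what such “coarsening” could look like, the Python snippet below thins out a stream of readings and rounds each remaining value to a coarse step. The function name `coarsen`, the step size and the sample values are all made up for illustration; this is not how any particular operating system implements it.

```python
def coarsen(samples, keep_every=4, step=50):
    """Keep every `keep_every`-th reading and round it to the nearest multiple of `step`."""
    return [round(value / step) * step for value in samples[::keep_every]]


# Made-up brightness readings, imagined as arriving 20 times per second.
raw = [312, 318, 305, 330, 341, 299, 287, 310]

# Keeping one reading in four turns 20 per second into 5 per second.
print(coarsen(raw))  # [300, 350]
```

Automatic brightness control only needs the general level of light, so it loses nothing here, while the small fluctuations an attacker would rely on are thrown away.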
